At CES 2026, Razer offered a glimpse of what AI-powered wearable computing could look like in the near future. The company unveiled Project Motoko, a concept wireless headset that combines cameras, audio, and an AI assistant in a single lightweight device.

Project Motoko runs on Qualcomm Snapdragon platforms and is designed to work with multiple AI systems rather than being locked into one ecosystem. It is compatible with popular AI platforms such as OpenAI’s models, Google’s Gemini, and xAI’s Grok. Razer says this flexibility is key to making wearables useful in everyday life, not just for gaming.

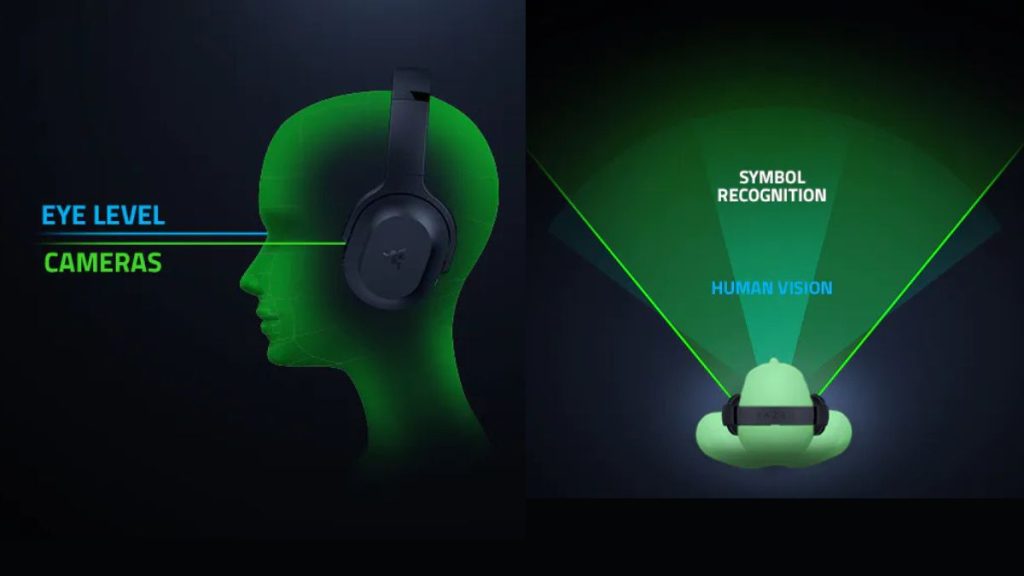

Real-time awareness is central to the design. Two cameras mounted at eye level capture the world from the wearer’s point of view, and the headset uses that feed to recognize objects, read text, and interpret its surroundings as they change. In practical terms, this could mean translating signs while traveling, summarizing documents at work, or tracking workout movements at the gym.

Razer also highlights Motoko’s visual perception. According to the company, the system captures depth and detail beyond the range of normal peripheral vision, helping users stay aware of their environment while multitasking.

Motoko has multiple microphones that capture both near-field and far-field audio. This lets it pick up the wearer’s voice commands clearly while also tracking ambient sounds and conversations taking place within the wearer’s field of view.

Acting as a hands-free AI assistant, the headset responds with real-time audio feedback and can adapt to the user’s habits, schedule, and preferences. Razer says this makes Motoko suitable for use while commuting, gaming, or working without breaking focus.

Razer also sees Motoko as a tool for machine learning research. By capturing real human point-of-view data, including focus and depth, the headset could help train humanoid robots to better understand how people see and interact with the world.

For now, Project Motoko is a concept device. Razer has not shared details about pricing or a commercial launch timeline.