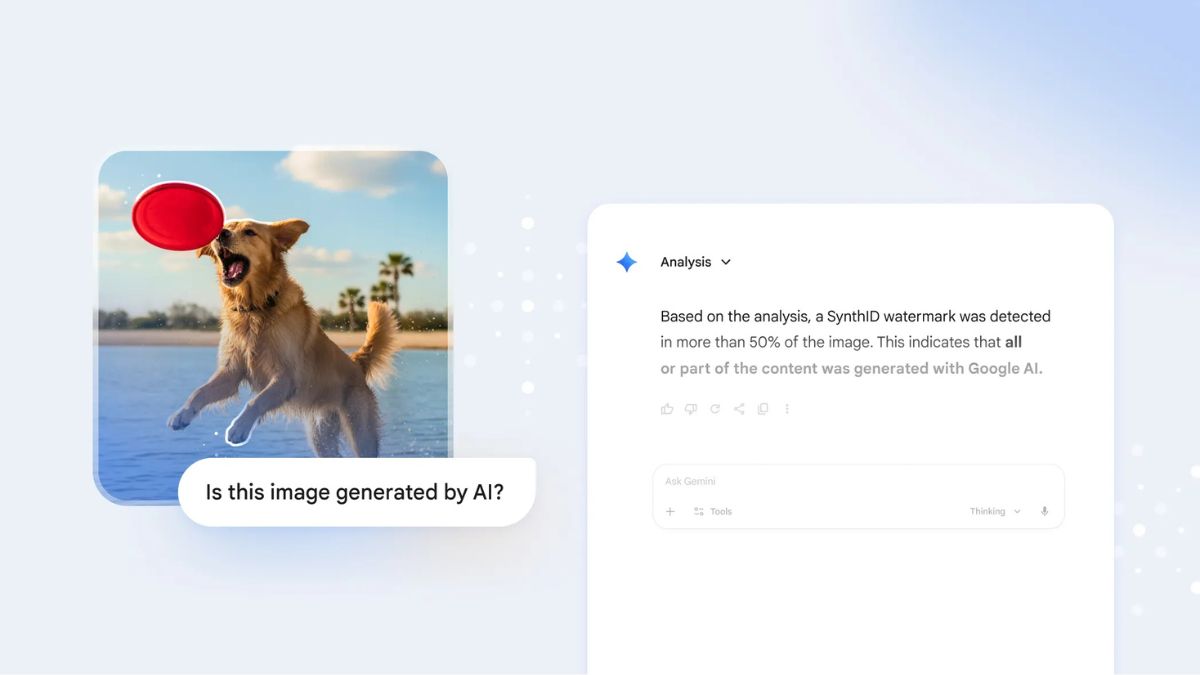

Google has announced a Gemini update aimed at bringing more transparency to AI-generated images. Generative media is becoming sharper, more realistic, and harder to tell apart from real photos. In response, Google is rolling out a tool that helps users check whether an image was created or edited with Google’s AI. The feature is now live in the Gemini app and relies on SynthID, Google’s own digital watermarking technology.

Google has been working on content authenticity for years, but the rise of generative AI has made that work more urgent. The company says its goal is simple: give people clear context about the images they see online. Whether you are scrolling through social feeds, reading the news, or checking images forwarded in chats, knowing what is real and what is AI-generated is becoming essential.

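Anyone can now upload an image to the Gemini app and ask a direct question such as “Was this created with Google AI?” Gemini checks the image for a SynthID watermark and uses its own reasoning to explain whether the image was generated or edited with Google’s AI tools. SynthID watermarks are imperceptible to the human eye. They are also designed to remain detectable after common modifications such as filters, color changes, and compression, which makes them a strong signal for verification. Because the check looks specifically for Google’s watermark, it can confirm that Google AI was involved. It cannot show that an image made with another company’s tools is authentic.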

Google introduced SynthID in 2023, and it has since been used to watermark more than 20 billion pieces of AI-generated content. The company has also been testing its SynthID Detector with journalists and media professionals to help counter misinformation and deepfakes. With this update, that verification capability is available to everyone through the Gemini app.

Google says this is only the first step. It plans to extend verification to more formats, including video and audio, and eventually to bring these tools to other products such as Google Search. The company is also exploring related features through projects like Backstory from Google DeepMind, which aims to help users understand where the media they encounter comes from.
